Deep Evolutionary Network Structured Representation (DENSER) is a new method for automatically designing artificial neural networks (ANNs) using evolutionary computation. The algorithm not only searches for the best network topology but also tunes hyperparameters (such as learning or data augmentation hyperparameters). To achieve this automatic design, the representation is divided into two distinct levels: the outer level encodes the overall structure of the network, and the inner level encodes the parameters associated with each layer. The allowed layers and the value ranges of the hyperparameters are defined by a human-readable context-free grammar.

The main contributions of this paper are:

DENSER: A general framework based on evolutionary principles that automatically searches for the structure and parameters of large-scale deep networks with different layer types or goals;

An automatically generated Convolutional Neural Network (CNN) that, without any prior knowledge, effectively classifies the CIFAR-10 dataset, with an average accuracy of 94.27%.

The ANNs generated with DENSER generalize well. Specifically, an average accuracy of 78.75% was obtained on the CIFAR-100 dataset by a network whose topology was evolved on the CIFAR-10 dataset. To the best of our knowledge, this is the best result reported on CIFAR-100 by a method that designs CNNs automatically.

DENSER

To evolve the structure and parameters of artificial neural networks, we propose Deep Evolutionary Network Structured Representation (DENSER), which combines the basic ideas of genetic algorithms (GAs) and Dynamic Structured Grammatical Evolution (DSGE).

Representation

Each solution encodes an ANN as an ordered sequence of feedforward layers and their respective parameters; the learning settings and any other hyperparameters can also be encoded. The representation of candidate solutions is organized at two different levels:

GA level: Encodes the macro structure of the network and is responsible for representing the sequence of layers, which is later used as an indicator of the grammar start symbol. It requires defining the structure allowed for the network, that is, the valid sequence of layers.

DSGE level: Encodes the parameters associated with each layer. The parameters and their allowed values or ranges are encoded in a grammar that must be defined by the user.
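The two levels can be illustrated with a minimal sketch. The grammar, the layer and parameter names, the value ranges, and the `STRUCTURE` list below are all hypothetical and chosen only for illustration; they are not the grammar used in the paper.

```python
import random

# Hypothetical toy grammar: each start symbol maps to its possible expansions.
# Every parameter is described as (name, kind, low, high).
GRAMMAR = {
    "features": [
        {"layer": "conv", "params": [("num_filters", "int", 32, 256),
                                     ("filter_size", "int", 1, 5)]},
        {"layer": "pool", "params": [("kernel_size", "int", 2, 5)]},
    ],
    "classification": [
        {"layer": "dense", "params": [("num_units", "int", 64, 2048)]},
    ],
    "learning": [
        {"layer": "sgd", "params": [("lr", "float", 0.0001, 0.1)]},
    ],
}

# Outer (GA) level: ordered modules as (start symbol, min layers, max layers).
STRUCTURE = [("features", 1, 10), ("classification", 1, 2)]


def random_layer(symbol, rng):
    """Inner (DSGE) level: pick an expansion and sample its parameters."""
    index = rng.randrange(len(GRAMMAR[symbol]))
    rule = GRAMMAR[symbol][index]
    values = {}
    for name, kind, low, high in rule["params"]:
        if kind == "int":
            values[name] = rng.randint(low, high)
        else:
            values[name] = rng.uniform(low, high)
    return {"symbol": symbol, "expansion": index,
            "layer": rule["layer"], "params": values}


def random_individual(rng):
    """Build one candidate: a list of modules plus the learning settings."""
    modules = []
    for symbol, low, high in STRUCTURE:
        count = rng.randint(low, high)
        modules.append([random_layer(symbol, rng) for _ in range(count)])
    return {"modules": modules, "learning": random_layer("learning", rng)}


individual = random_individual(random.Random(0))
```

In this sketch the outer list fixes which kind of layer may appear where, while the grammar alone decides which parameters each layer carries and which values they may take.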

Crossover

Two crossover operators are proposed, each acting on a different level of the genotype. In the context of this work, a module is not a group of layers that can be replicated multiple times, but the set of layers that belong to the same GA structure index.

Given that all individuals share the same modules (possibly with a different number of layers), the first crossover operator is a one-point crossover, which swaps layers between two individuals within the same module.

The second crossover operator is a uniform crossover, which swaps entire modules between two individuals.
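A minimal sketch of the two operators follows, assuming an individual is a list of modules and each module is a list of layers (plain strings here). How the cut point is chosen and the swap probability of 0.5 are assumptions made for illustration, not details taken from the paper.

```python
import random


def one_point_crossover(parent_a, parent_b, module_index, rng):
    """Swap the layers after a cut point inside one shared module."""
    child_a = [list(module) for module in parent_a]
    child_b = [list(module) for module in parent_b]
    mod_a, mod_b = child_a[module_index], child_b[module_index]
    # Assumption: a single cut position valid for both modules.
    cut = rng.randint(0, min(len(mod_a), len(mod_b)))
    child_a[module_index] = mod_a[:cut] + mod_b[cut:]
    child_b[module_index] = mod_b[:cut] + mod_a[cut:]
    return child_a, child_b


def uniform_crossover(parent_a, parent_b, rng, swap_prob=0.5):
    """Swap whole modules between two individuals, module by module."""
    child_a = [list(module) for module in parent_a]
    child_b = [list(module) for module in parent_b]
    for index in range(len(child_a)):
        if rng.random() < swap_prob:
            child_a[index], child_b[index] = child_b[index], child_a[index]
    return child_a, child_b


rng = random.Random(3)
parent_a = [["conv_a1", "conv_a2", "pool_a3"], ["dense_a1"]]
parent_b = [["conv_b1", "pool_b2"], ["dense_b1", "dense_b2"]]
layer_swap = one_point_crossover(parent_a, parent_b, 0, rng)
module_swap = uniform_crossover(parent_a, parent_b, rng)
```

With a shared cut point the two children simply exchange module lengths, so any layer-count limits satisfied by both parents are still satisfied by both children.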

Mutation

To promote the evolution of artificial neural networks, we developed a set of mutation operators. At the GA level, the mutation operators manipulate the network structure:

Add layer: Generate a new layer based on the start symbol possibilities of the module where the layer is to be placed;

Duplicate layer: Randomly select a layer and copy it to another valid position in the module. The copy is made by reference, so if a parameter of the layer changes, all of its duplicates change as well;

Delete layer: Select and delete a layer from the given module.
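The three structural operators can be sketched as below, assuming a module is a Python list of layer dictionaries. The layer-count limits and the way positions are chosen are assumptions for illustration. Inserting the same object twice is one simple way to obtain a duplicate that stays linked to the original.

```python
import random


def add_layer(module, new_layer, rng, max_layers):
    """Insert a newly generated layer at a random valid position."""
    if len(module) < max_layers:
        module.insert(rng.randint(0, len(module)), new_layer)


def duplicate_layer(module, rng, max_layers):
    """Insert a second reference to an existing layer (not a deep copy)."""
    if module and len(module) < max_layers:
        layer = rng.choice(module)
        module.insert(rng.randint(0, len(module)), layer)


def delete_layer(module, rng, min_layers):
    """Remove a randomly chosen layer, keeping at least min_layers."""
    if len(module) > min_layers:
        del module[rng.randrange(len(module))]


rng = random.Random(1)
module = [{"type": "conv", "num_filters": 32}]
duplicate_layer(module, rng, max_layers=10)
# Both entries are the same object, so this change is visible in both.
module[0]["num_filters"] = 64
```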

The mutation operators above only act on the general structure of the network; to change the parameters of the layers, the following DSGE mutations are used:

Grammatical mutation: An expansion possibility is replaced by another one;

Integer mutation: Generate a new integer value within the allowed range;

Float mutation: Apply a Gaussian perturbation to a given floating point value.
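The parameter-level operators can be sketched as follows. The standard deviation of the perturbation and the clamping of the result to the allowed range are assumptions; the text only states that a Gaussian perturbation is applied.

```python
import random


def grammatical_mutation(current_index, num_expansions, rng):
    """Replace the chosen expansion with a different one, if any exists."""
    if num_expansions < 2:
        return current_index
    others = [i for i in range(num_expansions) if i != current_index]
    return rng.choice(others)


def integer_mutation(low, high, rng):
    """Draw a new integer uniformly from the allowed range (inclusive)."""
    return rng.randint(low, high)


def float_mutation(value, low, high, rng, sigma=0.15):
    """Add Gaussian noise, then clamp to the allowed range (assumption)."""
    mutated = value + rng.gauss(0.0, sigma)
    return min(high, max(low, mutated))


rng = random.Random(42)
new_expansion = grammatical_mutation(0, 3, rng)
new_filters = integer_mutation(32, 256, rng)
new_lr = float_mutation(0.01, 0.0001, 0.1, rng)
```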

Experimental results

For testing, we performed experiments on the generation of CNNs for the CIFAR-10 dataset. CIFAR-10 contains 60,000 samples, each a 32x32 RGB color image, divided into 10 classes. The solutions evolved by DENSER are mapped to Keras models in order to assess their performance. The goal of the task is to maximize the accuracy of object recognition.

To analyze the generalization and scalability of the evolved networks, we then take the best CNN topology and test it on the classification of the CIFAR-100 dataset.

CNN Evolution on CIFAR-10

We conducted 10 evolutionary runs on the generation of CNNs for the classification of the CIFAR-10 dataset. For the generated networks, we analyze their fitness (the accuracy on the classification task) and their number of hidden layers.

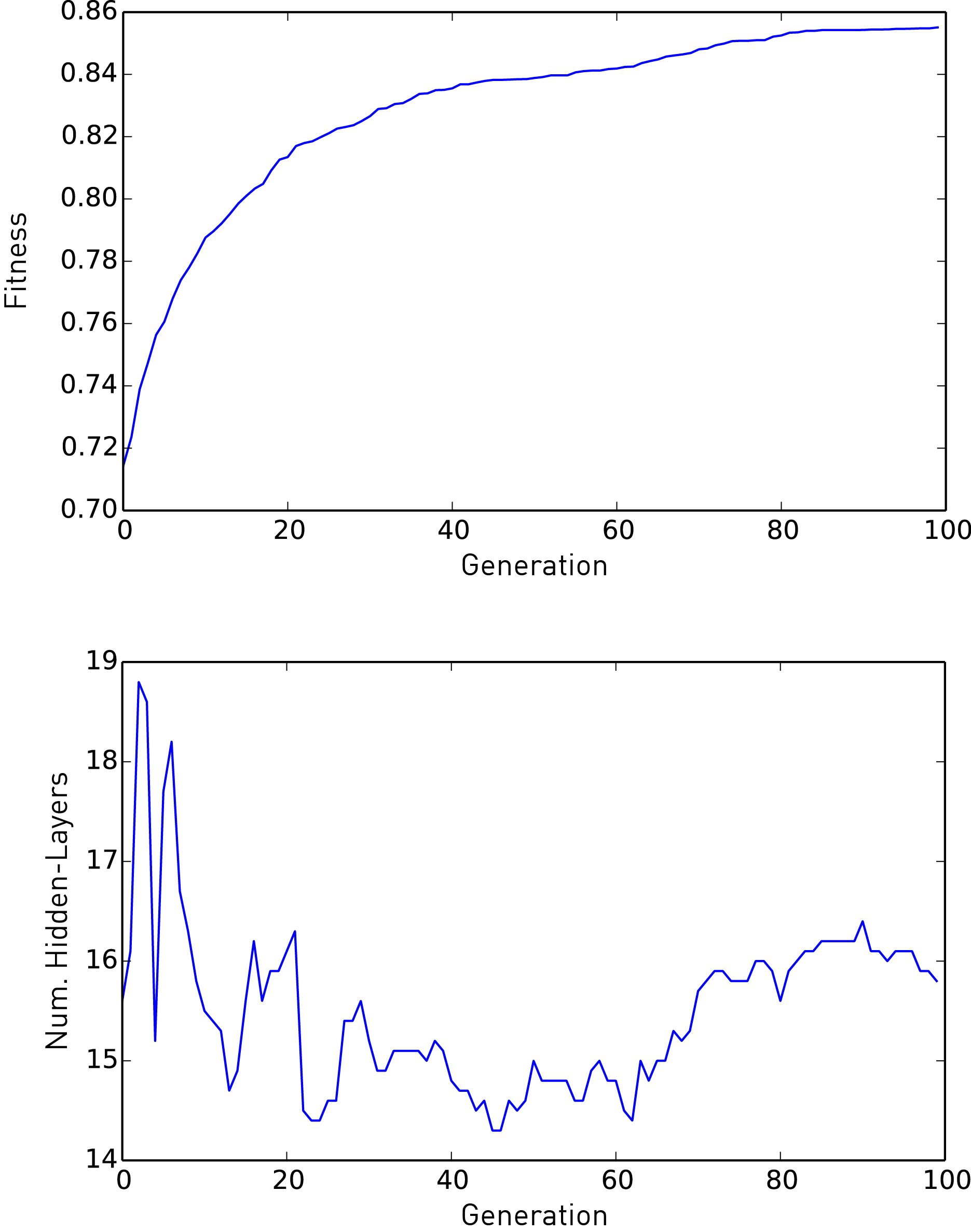

Figure 1

Figure 1 depicts the evolution of the average fitness and number of layers of the best CNNs across generations. The results show that evolution is occurring and that solutions tend to converge around the 80th generation. From the start of evolution until about the 60th generation, the increase in performance is accompanied by a decrease in the number of layers; from the 60th generation onwards, the increase in performance comes with an increase in the number of hidden layers of the best networks. This suggests that the randomly generated solutions of the first generation have a large number of layers whose parameters are set at random.

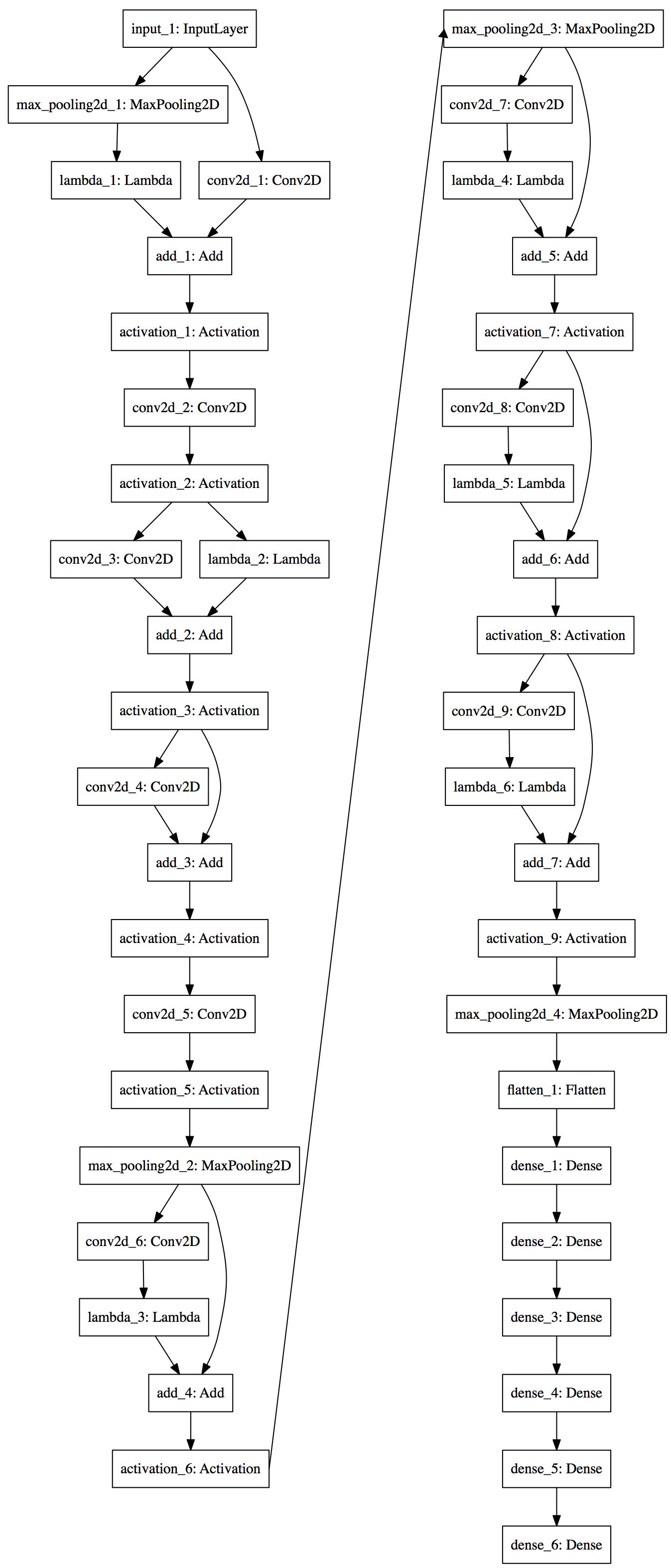

Figure 2

The best network found during evolution (in terms of accuracy) is shown in Figure 2. The most puzzling feature of the evolved networks is the importance and number of the dense layers used at the end of the topology. To the best of our knowledge, the sequential use of such a large number of dense layers is unprecedented. It can even be said that a human would never come up with such a structure, which makes this evolutionary result very meaningful.

Once the evolutionary process is complete, the best network found in each run is retrained five times. First, we train the networks with the same learning rate (lr=0.01), but this time for 400 epochs instead of 10. This yields an average classification accuracy of 88.41%. To further improve the accuracy of the networks, we augment each sample of the test set to generate 100 augmented images, and the prediction is the class with the maximum average confidence over those 100 images. With this, the average accuracy of the best evolved networks increases to 89.93%.
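The prediction over augmented test images can be sketched as below, assuming the per-class confidences are averaged over the augmented copies and the class with the highest average is returned. The `augment` function (a random horizontal flip) and the `model` callable are hypothetical stand-ins; the augmentation actually used is not described here.

```python
import random


def augment(image, rng):
    """Hypothetical augmentation: flip the image horizontally half the time."""
    if rng.random() < 0.5:
        return [row[::-1] for row in image]
    return [list(row) for row in image]


def predict_with_augmentation(model, image, rng, num_augmented=100):
    """Average the class confidences over augmented copies of one image."""
    totals = None
    for _ in range(num_augmented):
        probs = model(augment(image, rng))
        if totals is None:
            totals = list(probs)
        else:
            totals = [t + p for t, p in zip(totals, probs)]
    mean = [t / num_augmented for t in totals]
    # The predicted class is the one with the highest average confidence.
    label = max(range(len(mean)), key=lambda c: mean[c])
    return label, mean
```

Here `model` is any callable that maps an image (a list of pixel rows) to a list of class confidences, for example a thin wrapper around a trained network.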

To investigate whether the performance of the best networks can be improved further, we train them with the same strategy as CGP-CNN, which uses a varying learning rate: it starts at 0.01; at the 5th epoch it is increased to 0.1; at the 250th epoch it is reduced back to 0.01; and at the 375th epoch it is finally reduced to 0.001. With this training strategy, the average accuracy of the networks increases to 93.38%. If the test data is augmented as well, the average accuracy is 94.13%, i.e., an average error of 5.87%.
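The schedule can be written as a small step function. This is a sketch that assumes epochs are counted from 0 and that each change takes effect at the stated epoch index; the exact indexing convention is not given in the text.

```python
def learning_rate(epoch):
    """Step schedule: 0.01, then 0.1, then 0.01, then 0.001."""
    if epoch < 5:
        return 0.01
    if epoch < 250:
        return 0.1
    if epoch < 375:
        return 0.01
    return 0.001


# Learning rate for every epoch of a 400-epoch training run.
schedule = [learning_rate(epoch) for epoch in range(400)]
```

A function of this shape can be passed to a per-epoch scheduling hook of a training framework, for instance a learning-rate scheduler callback.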

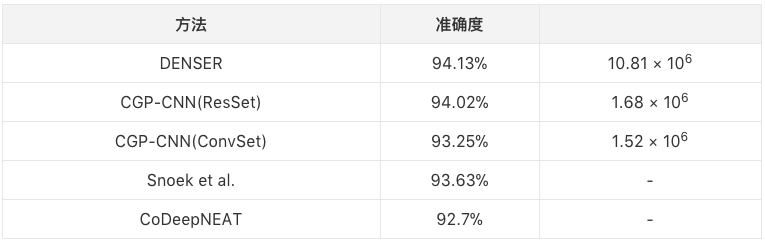

Comparison of CIFAR-10 results

The table above compares the best results obtained by DENSER with those of other methods. DENSER achieves the highest accuracy. Note that in our approach the number of trainable parameters is much higher, because we allow fully connected layers to be placed in the evolved CNNs. Moreover, no prior knowledge was used during evolution.

Generalization capability on CIFAR-100

To test the generalization and scalability of the evolved networks, we applied the best network generated on CIFAR-10 to CIFAR-100. For the network to work, we only changed the number of output neurons of the softmax layer from 10 to 100.

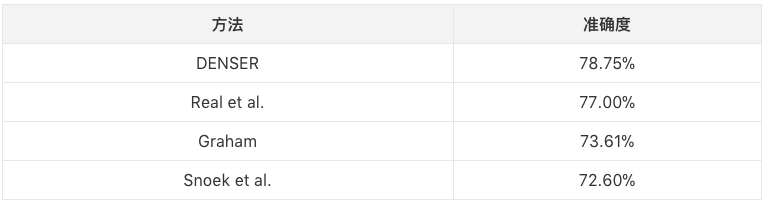

The training of each network is stochastic: the initial conditions differ and, because of data augmentation, the networks are trained on different samples. Therefore, to further improve the results, we investigated whether the approach Graham used to test the performance of fractional max-pooling is enough to improve the performance of our networks. In short, instead of a single network, we use an ensemble in which each network is the result of an independent training run. With this method, the same single network trained five times reaches an accuracy of 77.51%; an ensemble of 10 networks reaches 77.89%; and an ensemble of 12 networks reaches 78.14%. These results outperform CNN methods that use standard data augmentation.

In addition, instead of building the ensemble from a single network structure, we also tested an ensemble composed of the two best network topologies found by DENSER. With this method, the accuracy of the model increased to 78.75%.
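One common way to combine independently trained networks is to average their class confidences and take the class with the highest average. The sketch below assumes that combination rule; the text does not state how the outputs of the ensemble members are merged.

```python
def ensemble_predict(per_network_probs):
    """Average class confidences across networks and return the best class."""
    num_networks = len(per_network_probs)
    num_classes = len(per_network_probs[0])
    mean = [
        sum(probs[c] for probs in per_network_probs) / num_networks
        for c in range(num_classes)
    ]
    return max(range(num_classes), key=lambda c: mean[c])


# Confidences for one image from three independently trained networks.
outputs = [
    [0.5, 0.3, 0.2],
    [0.1, 0.6, 0.3],
    [0.2, 0.5, 0.3],
]
predicted_class = ensemble_predict(outputs)
```

The same function covers an ensemble built from several topologies, since it only needs one confidence vector per network.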

CIFAR-100 results

The above table shows the comparison of DENSER results on the CIFAR-100 dataset with other methods.
